
Navigating three privacy pitfalls of AI adoption

Trustworthy AI extends well beyond privacy, spanning security and ethical considerations as well. But to get AI right, you first need to get data privacy right. Learn about three common privacy pitfalls in AI adoption and how you can avoid them.

Bex Evans

Senior Product Marketing Manager

June 10, 2024

Gartner® predicts that “By 2026, more than 80% of enterprises will have used generative artificial intelligence (GenAI) application programming interfaces (APIs) or models, and/or deployed GenAI-enabled applications in production environments, up from less than 5% in 2023.”[1]

At the same time, the Gartner Hype Cycle methodology describes what can follow early enthusiasm: “Interest wanes as experiments and implementations fail to deliver. Producers of the technology shake out or fail. Investments continue only if the surviving providers improve their products to the satisfaction of early adopters.”[2]

Research from Forrester identifies data privacy and security concerns as the top barrier to generative AI adoption. Trustworthy AI extends well beyond the privacy domain, spanning security and ethical considerations as well. But to get AI right, you first need to get data privacy right.

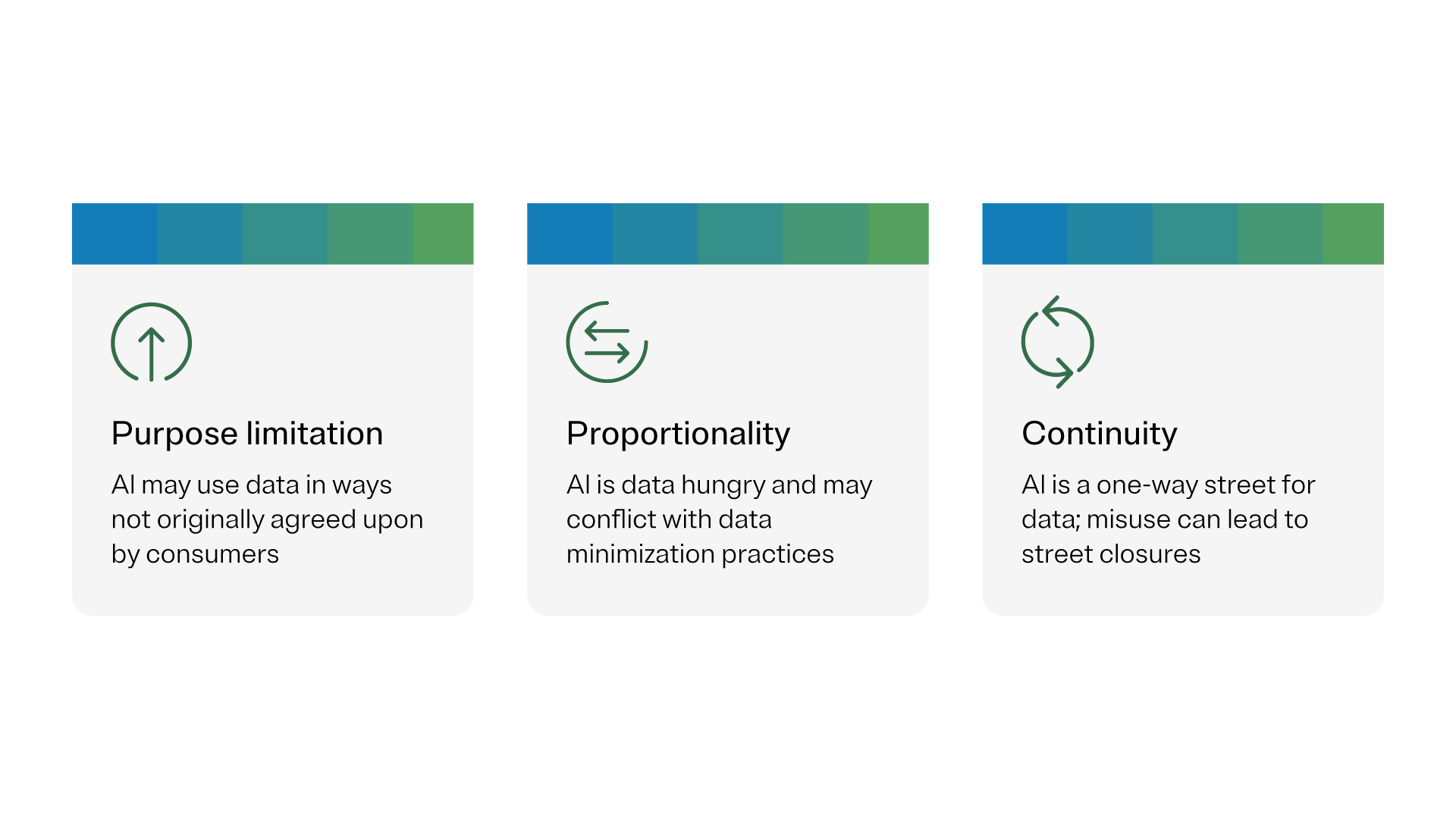

3 privacy pitfalls in AI adoption

That’s because AI amplifies existing privacy gaps: a single misconfigured access point becomes far more problematic once it’s exposed to an AI system. When navigating the role of data privacy in AI systems, there are three privacy pitfalls to be aware of:

- Purpose limitation: AI incentivizes secondary uses of data, meaning data is used for a purpose other than the one it was originally collected for.

- Proportionality: AI requires robust datasets to ensure accuracy, fairness, and quality, which may conflict with data minimization practices.

- Business continuity: Data goes into an AI system easily, but it is hard to take back out. If you don’t put the right data in, that system may someday become unavailable to you.

Here, we’ll explore each pitfall in more detail and look at some common practices for addressing the risks associated with it.

Purpose limitation in the context of AI

A common scenario that illustrates the importance of responsible data usage is the collection of birth dates for identity verification. In contexts such as two-factor authentication or banking, verifying a person's identity is crucial. This process often involves collecting sensitive information – like their birth date.

However, possessing this data for identity verification does not automatically grant permission to use it for other purposes. For instance, if a marketing team wants to use birth dates to send out birthday promotions, they must first obtain explicit consent from the individuals involved. Without such consent, using birth dates for marketing is a violation of data privacy principles.
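To make purpose limitation concrete, here is a minimal Python sketch, assuming each stored data point carries the purpose it was collected for plus any additional purposes the individual has explicitly consented to. The `PersonalDataRecord` schema, the `use_data` function, and the purpose labels are hypothetical illustrations, not part of any particular product.

```python
from dataclasses import dataclass, field


class PurposeViolation(Exception):
    """Raised when data is requested for a purpose it was not approved for."""


@dataclass
class PersonalDataRecord:
    # Hypothetical schema: one personal data point plus its approved purposes.
    subject_id: str
    field_name: str
    value: str
    collected_for: str  # purpose stated at collection time
    consented_purposes: set[str] = field(default_factory=set)  # extra purposes with explicit consent

    def allowed_purposes(self) -> set[str]:
        return {self.collected_for} | self.consented_purposes


def use_data(record: PersonalDataRecord, purpose: str) -> str:
    """Release the value only for the original purpose or an explicitly consented one."""
    if purpose not in record.allowed_purposes():
        raise PurposeViolation(
            f"{record.field_name} of {record.subject_id} is not approved for '{purpose}'"
        )
    return record.value


if __name__ == "__main__":
    dob = PersonalDataRecord("user-42", "birth_date", "1990-05-17",
                             collected_for="identity_verification")
    print(use_data(dob, "identity_verification"))  # permitted: original purpose
    try:
        use_data(dob, "marketing")  # blocked: no consent recorded yet
    except PurposeViolation as err:
        print("blocked:", err)
    dob.consented_purposes.add("marketing")  # explicit consent obtained
    print(use_data(dob, "marketing"))  # now permitted
```

The check sits at the point of use rather than at collection, so a new purpose such as marketing stays blocked until consent for it is actually recorded.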

Challenges with generative AI and consent

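The advent of large language models (LLMs) and generative AI adds another layer of complexity to this issue, because data collected for one purpose can easily be repurposed to train, fine-tune, or prompt a model.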

Informed consent depends on clear, detailed information provided upfront: individuals must understand, in plain language, how their data will be used before they agree to it.

One significant challenge organizations face is striking a balance between offering enough context and avoiding overwhelming individuals with lengthy terms and conditions that they might – and often do – simply skip. Effective communication in the audience's language is vital to ensure that consent is truly informed and not just a formality.

Proportionality: balancing data robustness and minimization in the context of AI

Consider a resume scanning tool designed to streamline hiring. Historically, organizations might exclude sensitive information such as race, gender, and ethnicity from resumes to minimize privacy risk and limit the damage if the application were breached. However, excluding these data points can also prevent the identification and mitigation of bias within the hiring process.

Bias can persist even when sensitive data is omitted, because other factors, such as postal code or name, can act as proxies for sensitive attributes. To accurately analyze and ensure fair representation in a dataset, it’s necessary to document and record sensitive data points. This allows for proactive monitoring of the system for fairness and the detection of potential biases.

A common challenge faced by organizations today is that they may not have collected race, gender, or ethnicity information initially due to privacy concerns or the potential discomfort it might cause applicants. Consequently, they lack the data needed to perform thorough fairness assessments.
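Once group labels are recorded, part of a fairness assessment can be automated. The sketch below computes per-group selection rates for a screening tool and flags groups whose rate falls below four-fifths of the highest group's rate, a rule of thumb from the US Uniform Guidelines on Employee Selection Procedures. The function names and the 0.8 default are assumptions for illustration, and a flagged group is a signal to investigate, not proof of bias.

```python
from collections import defaultdict


def selection_rates(outcomes: list[tuple[str, bool]]) -> dict[str, float]:
    """outcomes: (group, advanced) pairs produced by the screening tool."""
    totals: dict[str, int] = defaultdict(int)
    selected: dict[str, int] = defaultdict(int)
    for group, advanced in outcomes:
        totals[group] += 1
        if advanced:
            selected[group] += 1
    return {g: selected[g] / totals[g] for g in totals}


def impact_ratios(rates: dict[str, float]) -> dict[str, float]:
    """Each group's selection rate relative to the highest-rate group."""
    if not rates:
        return {}
    best = max(rates.values())
    return {g: (r / best if best > 0 else 1.0) for g, r in rates.items()}


def flag_groups(outcomes: list[tuple[str, bool]], threshold: float = 0.8) -> list[str]:
    """Groups whose impact ratio falls below the threshold (four-fifths by default)."""
    ratios = impact_ratios(selection_rates(outcomes))
    return sorted(g for g, ratio in ratios.items() if ratio < threshold)


if __name__ == "__main__":
    # Illustrative numbers only: group A advances 5 of 10, group B advances 3 of 10.
    outcomes = [("A", True)] * 5 + [("A", False)] * 5 + [("B", True)] * 3 + [("B", False)] * 7
    print(selection_rates(outcomes))  # {'A': 0.5, 'B': 0.3}
    print(flag_groups(outcomes))      # ['B'] because 0.3 / 0.5 = 0.6 < 0.8
```

Note that none of this is computable if the group labels were never collected, which is exactly the gap described above.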

Privacy-enhancing technologies (PETs)

To address these challenges, organizations can employ privacy-enhancing technologies (PETs). PETs such as differential privacy, synthetic data, homomorphic encryption, and multi-party computation help protect sensitive inputs while still enabling the necessary analysis, safeguarding individual privacy during data processing and model training.
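As an illustration of one of these PETs, the sketch below applies the Laplace mechanism from differential privacy to a counting query, such as how many applicants belong to a given group. A count changes by at most 1 when one person is added or removed, so adding Laplace noise with scale 1/ε makes that single query ε-differentially private. The function names are hypothetical, and Python's `random` module is used only for demonstration; a vetted differential privacy library is the safer choice in production.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    # The difference of two i.i.d. exponentials with mean `scale` is Laplace(0, scale).
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(records, predicate, epsilon: float, rng: random.Random) -> float:
    """Epsilon-DP answer to "how many records satisfy predicate?".

    Adding or removing one person changes a count by at most 1 (sensitivity 1),
    so Laplace noise with scale 1/epsilon suffices for this single query.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1.0 / epsilon, rng)


if __name__ == "__main__":
    rng = random.Random(7)  # seeded only so the demo is repeatable
    applicants = [{"id": i, "group": "B" if i % 4 == 0 else "A"} for i in range(200)]
    noisy = private_count(applicants, lambda a: a["group"] == "B", epsilon=0.5, rng=rng)
    print(f"noisy count of group B: {noisy:.1f} (true count is 50)")
```

A smaller ε adds more noise and gives stronger privacy; every additional query spends more of the overall privacy budget, which is one reason the choice of PET depends on the use case.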

However, it is important to recognize that there is no one-size-fits-all solution. The choice of PET depends on the specific use case and the infrastructure in place. In many scenarios, a combination of multiple PETs may be required to adequately protect privacy while maintaining the utility of the data.

Business continuity and future-proofing data in the context of AI

For consent to be lawful, it must be freely given and able to be withdrawn at any time. This poses a significant challenge when a consumer requests the deletion of their data from a business-critical system: if business processes rely on AI systems trained on personal data, removing that data can disrupt business continuity.

AI models, much like human brains, can’t simply forget information once it has been learned. In practice, the most reliable option is to roll back to a previous version of the model, trained before the data in question was included, and then retrain the model without it.

This necessitates robust documentation on model versioning, dataset versioning, and detailed tracking of data categories and identifiers to ensure the data can be accurately removed.

Data governance and model retraining

The complexities associated with enforcing data governance and model retraining highlight the importance of thorough documentation and precise version control. This involves maintaining detailed logs of model versions, datasets, and the identifiers used to track individual data points. When a data subject revokes consent, these records allow for the targeted rollback and retraining of models.
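Such records can be as simple as a lineage table linking each model version to the dataset versions it was trained on, and each dataset version to the subject identifiers it contains. This minimal sketch, with hypothetical class and function names, answers two questions when consent is revoked: which model versions are affected, and which is the latest version trained without the subject's data. It assumes models are listed oldest first; a `None` rollback target means no clean version exists and the model must be retrained from scratch.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class DatasetVersion:
    version: str
    subject_ids: frozenset  # identifiers of the data subjects included


@dataclass(frozen=True)
class ModelVersion:
    version: str
    trained_on: tuple  # dataset versions used for training


def affected_models(subject_id: str, datasets: dict, models: list) -> list:
    """Model versions whose training data included the subject."""
    tainted = {d.version for d in datasets.values() if subject_id in d.subject_ids}
    return [m.version for m in models if tainted.intersection(m.trained_on)]


def rollback_target(subject_id: str, datasets: dict, models: list) -> Optional[str]:
    """Latest model version trained without the subject (models listed oldest first)."""
    affected = set(affected_models(subject_id, datasets, models))
    clean = [m.version for m in models if m.version not in affected]
    return clean[-1] if clean else None


def without_subject(dataset: DatasetVersion, subject_id: str, new_version: str) -> DatasetVersion:
    """New dataset version with the subject removed, to be used for retraining."""
    return DatasetVersion(new_version, dataset.subject_ids - {subject_id})


if __name__ == "__main__":
    datasets = {
        "ds-1": DatasetVersion("ds-1", frozenset({"a", "b"})),
        "ds-2": DatasetVersion("ds-2", frozenset({"a", "b", "c"})),  # subject "c" added here
    }
    models = [ModelVersion("m-1", ("ds-1",)), ModelVersion("m-2", ("ds-2",))]
    print(affected_models("c", datasets, models))  # ['m-2']
    print(rollback_target("c", datasets, models))  # m-1
    print(without_subject(datasets["ds-2"], "c", "ds-3"))
```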

Retrieval-augmented generation (RAG)

Given these data governance challenges, there is a compelling case for employing retrieval-augmented generation (RAG). RAG involves retrieving facts from an external knowledge base to ground LLMs in the most accurate and up-to-date information. This approach offers several benefits:

- Flexibility: External data sources used in RAG can be updated without the need to retrain the entire model.

- Accuracy: By retrieving information from trusted sources, RAG can improve factual accuracy and reduce the chances of AI-generated hallucinations.

- Accessibility: RAG lowers the barrier to adopting customizable generative AI services as it doesn’t require advanced data science techniques.

- Specificity: RAG is well-suited for retrieving specific information to provide accurate answers.

- Cost: Supplying external knowledge as prompt input is typically more cost-effective than fine-tuning the entire LLM.

By using RAG, organizations can control the data supplied at prompt time rather than continuously retraining models. This approach helps ensure business continuity and compliance with data privacy regulations, even when individual data points are removed due to withdrawn consent.
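To illustrate this point, here is a toy Python sketch of the retrieval side of RAG, assuming each document in the knowledge base is tagged with the data subject it concerns. Deleting a subject's documents immediately removes them from future prompts, with no retraining. Keyword overlap stands in for the vector similarity search that real RAG systems typically use, the call to an actual LLM is omitted, and all names here are hypothetical.

```python
import re
from dataclasses import dataclass
from typing import Optional


@dataclass
class Document:
    doc_id: str
    subject_id: Optional[str]  # data subject the document concerns, if any
    text: str


def tokens(text: str) -> set:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


class KnowledgeBase:
    """Toy external knowledge store standing in for a vector database."""

    def __init__(self) -> None:
        self._docs: dict = {}

    def add(self, doc: Document) -> None:
        self._docs[doc.doc_id] = doc

    def delete_subject(self, subject_id: str) -> int:
        """Erase a subject's documents; the model itself is never retrained."""
        doomed = [i for i, d in self._docs.items() if d.subject_id == subject_id]
        for doc_id in doomed:
            del self._docs[doc_id]
        return len(doomed)

    def retrieve(self, query: str, k: int = 2) -> list:
        """Top-k documents by keyword overlap with the query."""
        q = tokens(query)
        scored = [(len(q & tokens(d.text)), d.doc_id, d) for d in self._docs.values()]
        scored = [s for s in scored if s[0] > 0]
        scored.sort(key=lambda s: (-s[0], s[1]))
        return [d for _, _, d in scored[:k]]


def build_prompt(question: str, kb: KnowledgeBase) -> str:
    """Ground the question in retrieved context; sending it to an LLM is out of scope."""
    context = "\n".join(f"- {d.text}" for d in kb.retrieve(question))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


if __name__ == "__main__":
    kb = KnowledgeBase()
    kb.add(Document("d1", "user-7", "User 7 prefers email contact"))
    kb.add(Document("d2", None, "Refunds are accepted within 30 days"))
    print(build_prompt("How does user 7 prefer contact?", kb))
    kb.delete_subject("user-7")  # consent withdrawn
    print(build_prompt("How does user 7 prefer contact?", kb))  # context no longer includes d1
```

The design choice is that personal data lives only in the retrievable store, not in model weights, so honoring a deletion request is a delete operation rather than a rollback and retrain.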

You may also like

Webinar

Technology Risk & Compliance

Smarter TPRM in action: Using AI to drive efficiency and better risk outcomes

See how AI‑enabled TPRM transforms traditional vendor risk programs in this live demo, showcasing faster assessments, reduced manual effort, clearer risk insights, and smarter prioritization across the third‑party lifecycle to build a more scalable, outcome‑driven TPRM program.

March 25, 2026

Webinar

Technology Risk & Compliance

From regulation to resilience: Practical ways to operationalise NIS2

Learn how to operationalize NIS2 in this webinar, with practical guidance for applying requirements across third parties and critical suppliers, unifying teams, strengthening risk ownership, improving visibility, and using NIS2 to build stronger cybersecurity and operational resilience beyond compliance.

March 18, 2026

Webinar

Privacy Automation

Evolving privacy programs with AI: What’s new in the Winter ’26 Release

See a guided walk‑through of both foundational privacy automation capabilities and the newest Winter ’26 enhancements across the Privacy Automation Suite and AI Governance.

March 11, 2026

Webinar

Technology Risk & Compliance

TPRM in the age of AI: Managing risk while scaling smarter programs

Discover how AI is transforming third‑party risk management in this webinar, exploring governance of third‑party AI from intake to monitoring and how TPRM leaders use AI.

March 11, 2026

Webinar

AI Governance

Introducing the AI Governance Maturity framework

An introductory session on the OneTrust AI Governance Maturity Model that helps leaders benchmark their current state, understand the four maturity stages, and see how governance evolves from manual compliance to automated, lifecycle‑embedded enablement.

March 03, 2026

Webinar

Responsible AI

AI’s next leap: How first-party data and governance unlock personalization at scale

Discover how first-party data and governance unlock AI-powered personalization, bridging the gap from pilots to full-scale media transformation.

February 18, 2026

Infographic

Third-Party Risk

Accelerate growth: Uniting AI governance and risk management

AI risk can’t be managed in silos. Effective AI governance brings security, privacy, risk, and the business together to reduce exposure and accelerate innovation.

February 16, 2026

White Paper

AI Governance

The dream killer problem: Why traditional governance can’t keep up with AI

Learn what AI governance means today and how AI-Ready Governance provides a framework for managing risk while enabling responsible AI innovation.

February 05, 2026

In-Person Event

AI Governance

Risk on the road - Amsterdam

Discover how to safely implement and govern AI while managing risk and compliance in a complex, fast-changing regulatory landscape.

February 04, 2026

eBook

AI Governance

AI inventory essentials: Key components to AI governance success

Learn how airlines and automotive brands use consent-driven data models to build trust, enable personalization, and stay compliant.

February 03, 2026

White Paper

AI Governance

Governing AI in 2026: A global regulatory guide

Download “Governing AI in 2026”, a global regulatory guide for privacy and compliance teams navigating AI laws, enforcement trends, and operational AI governance requirements.

January 28, 2026

On-Demand

AI Governance

Data Privacy Day with OneTrust, featuring Forrester: What’s next for privacy in the age of AI

Join Forrester and OneTrust on Data Privacy Day for insights on the evolving privacy landscape and how AI is reshaping organizational expectations worldwide.

January 28, 2026

Webinar

AI Governance

Risk-ready AI: Embedding continuous risk reviews into your AI lifecycle

In this webinar, OneTrust AI Governance specialists will showcase how leading teams embed continuous risk reviews into design, development, and deployment so innovation doesn’t outpace oversight.

January 22, 2026

On-Demand

Privacy Automation

From compliance to impact: Embedding privacy across the tech lifecycle

In this webinar, learn how organizations operationalize privacy at scale by embedding privacy across the tech lifecycle.

January 20, 2026

In-Person Event

Privacy Automation

Data Privacy Day breakfast roundtable- Privacy in the age of AI

Discover how organisations are preparing for Data Privacy Day with practical insights on AI-driven privacy processes, compliance challenges, and future-proof strategies.

January 14, 2026

eBook

AI Governance

Why traditional risk frameworks fall short for AI

Discover how AI governance reshapes the Chief Data Officer’s role beyond data into security, compliance, architecture, and product strategy, and learn a 90-day strategy for AI governance.

January 08, 2026

Webinar

AI Governance

The AI Governance starter kit: Practical tools for compliance

Watch our webinar to cut through the noise and get practical answers. We’ll simplify the essentials of AI governance and show you how to take confident, compliant steps forward.

January 08, 2026

On-Demand

AI Governance

Operationalizing the Databricks AI Security Framework with OneTrust

Learn how OneTrust and Databricks operationalize the Databricks AI Security Framework (DASF) within AI Governance and GRC. Discover how this partnership bridges policy and execution, enabling security, risk, and AI teams to scale controls across your AI estate.

January 07, 2026

Report

AI Governance

OneTrust Recognized in the 2025 Gartner® Market Report for AI Governance Platforms

December 12, 2025

On-Demand

AI Governance

Accelerate innovation with AI governance: A live demo

Join our live demo to see how OneTrust AI Governance helps you inventory and manage AI responsibly, with built-in privacy, risk, and ethical controls—integrated into your existing workflows.

December 11, 2025

On-Demand

AI Governance

EU Digital Omnibus explained: GDPR, AI Act & ePrivacy changes

Discover the EU Digital Omnibus unveiled by the European Commission, updating GDPR, ePrivacy, and the AI Act. Learn about changes to data rights, DPIAs, cookie consent, incident reporting, and AI compliance in our expert panel discussion.

December 08, 2025

On-Demand

Risk-enabled innovation: Uniting AI governance and risk management to accelerate responsible growth

Join OneTrust’s CISO and Head of AI Governance & Privacy to uncover how risk and governance alignment drives responsible AI innovation.

December 04, 2025

On-Demand

Defining an AI agent policy: Governing the next wave of intelligent systems

In this webinar, OneTrust AI Governance specialists will show how leading teams embed continuous risk reviews into design, development, and deployment so innovation doesn’t outpace oversight.

December 02, 2025

White Paper

AI Governance

Launching an AI Governance Committee in 90 Days

Establishing an AI Governance Committee brings clarity, accountability and speed, helping your organization move faster with confidence.

December 01, 2025

On-Demand

AI Governance

One nation, many rules: Governing AI in the US regulatory maze

In this session, OneTrust AI Governance and Privacy specialists will explore how privacy teams can leverage the lessons learned from years of privacy program building to prepare for this next wave of AI regulation.

November 19, 2025

Infographic

Consent & Preferences

What's changing in consent with AI

Download What’s Changing in Consent with AI to learn how AI transforms consent and governance, what new data risks to address, and how marketing and privacy teams can turn trust into a driver of responsible personalization and innovation.

November 13, 2025

Checklist

AI Governance

AI Readiness Checklist for Privacy Leaders Checklist

Download the AI Readiness Checklist for Privacy Leaders to assess your organization’s governance maturity, align privacy and data teams, and build trust in responsible AI adoption through transparency, accountability, and compliance.

November 10, 2025

On-Demand

AI Governance

Navigating AI governance: Privacy compliance under Philippine law

Join this webinar to learn how the Philippines is activley sharing its AI Governance framework, with new guidelines from the NPC and updates to the DPA that impact how organisations deploy and manage AI systems.

November 06, 2025

On-Demand

AI Governance

How agentic AI is powering the next wave of financial services innovation

This session will explore how distributed and democratized AI tools are unlocking new opportunities for innovation, while raising critical questions around governance, ethics, and trust.

November 05, 2025

On-Demand

AI Governance

Making AI governance work: Practical insights and tools

Learn how to implement effective, scalable AI governance with insights from KPMG and OneTrust experts in this live webinar.

November 04, 2025

On-Demand

AI Governance

Preparing for implementation: Best practices to build your AI Governance tech stack

Accelerate AI governance deployment with expert tips. Learn to align with EU AI Act, ISO 42001 & NIST RMF. Get your roadmap to early success.

October 29, 2025

On-Demand

AI Governance

The global AI regulatory landscape: How privacy and compliance teams can stay ahead

This webinar will provide practical insights from the latest DataGuidance AI Report, helping you align AI practices with emerging standards and prepare for future regulatory scrutiny.

October 20, 2025

Infographic

AI Governance

AI Committee RACI Matrix: Roles, rights & responsibilities for Enterprise AI

Download the AI Committee RACI Matrix to define roles, rights, and responsibilities across the AI lifecycle and strengthen oversight in enterprise AI governance.

October 20, 2025

On-Demand

AI Governance

Operationalizing responsible AI with innovative governance: A live demo

In this demo webinar, you’ll see how OneTrust AI Governance orchestrates real-time oversight across the AI lifecycle—helping you manage risk, accelerate adoption, and align compliance without stalling innovation.

October 15, 2025

On-Demand

Privacy Automation

Global regulatory update: Q4 2025 privacy & data trends

Stay ahead of privacy and data protection in Q4 2025. Join OneTrust’s live update covering US state laws, EU AI Act readiness, children’s data, cross-border transfers, DORA, APAC trends, and key enforcement actions.

October 14, 2025

On-Demand

AI Governance

From chaos to control: Architecting your AI Governance stack

In this webinar, learn how AI architects can implement enterprise-wide governance. Discover strategies for aligning compliance, risk, and development workflows without slowing innovation.

October 01, 2025

On-Demand

AI Governance

EU AI Act in force: Key impacts and what's next

Learn what’s now enforceable under the EU AI Act. Join our webinar for key insights on GPAI rules, GDPR overlap, and compliance strategies.

September 15, 2025

Report

AI Governance

The 2025 AI-Ready Governance Report

Across 1,250 IT leader responses, one theme stands out — legacy governance can’t keep up with AI. See how teams are shifting their mindset.

September 08, 2025

Infographic

AI Governance

The future of AI-ready governance

AI is exposing the gaps in traditional governances. While technology has evolved at lightning speed, the tools and frameworks we use to manage it haven’t kept up.

September 07, 2025

On-Demand

AI Governance

Tech-enabled AI governance with KPMG trusted AI on OneTrust

Join this webinar with experts from OneTrust and KPMG to explore how enterprises can operationalize AI governance across people, processes, and platforms, shifting from reactive oversight to proactive assurance.

August 21, 2025

On-Demand

AI Governance

AI in B2B: Governance, risk & compliance at scale

Register now to hear our expert panel explore how AI adoption in B2B—across cybersecurity, supply chain management, and enterprise operations—is driving new privacy and compliance risks at speed.

August 14, 2025

eBook

Consent & Preferences

Building trust in the AI age: A guide to consent, privacy, and first-party data excellence

Learn how to build trust in the AI era with consent-first, privacy-focused strategies that maximize first-party data and ensure compliance.

August 12, 2025

On-Demand

Responsible AI

Strengthening data governance to power responsible AI

Join our webinar to explore how data leaders are strengthening AI governance with trusted data foundations, quality management, and transparent practices.

August 05, 2025

eBook

AI Governance

Building a future-ready AI governance program: Best practices, proven frameworks, and expert insights for operationalizing responsible AI

Build a future-ready AI governance program with expert insights, proven frameworks, and actionable steps to operationalize responsible AI at scale.

July 25, 2025

On-Demand

AI Governance

AI in B2C: Managing compliance, consent & consumer trust

Join our expert panel as we cover how businesses can create AI-driven experiences while maintaining compliance.

July 24, 2025

On-Demand

AI Governance

Leading the charge on trustworthy AI governance

Hear from experts on how AI program owners are operationalizing trustworthy AI governance and scaling responsible innovation.

July 23, 2025

Report

AI Governance

Discover how AI governance solutions are championing responsible AI at scale

OneTrust is recognized in The Forrester report, "The AI Governance Solutions Landscape, Q2 2025", among notable AI governance vendors. Our solution supports organizations that need scalable governance across technical and business teams.

July 23, 2025

White Paper

AI Governance

Operationalizing AI governance: How OneTrust powers oversight across the AI stack

Discover how OneTrust unifies policy, risk, and platform oversight to operationalize AI governance across your enterprise.

July 18, 2025

On-Demand

AI Governance

Global AI regulation: How to prepare for what’s now and what’s next

In this live discussion, privacy and legal experts will highlight comparisons and differences between enacted and emerging laws, and guide businesses on how to strike the right balance between compliance, risk mitigation, and innovation.

July 16, 2025

On-Demand

Responsible AI

Building AI governance with security at the center

Discover how security leaders are securing AI models, data, and infrastructure while driving risk-aware AI governance programs.

July 10, 2025

On-Demand

AI Governance

OneTrust Using OneTrust: Managing AI

Our internal strategies for governing AI applications responsibly, ensuring ethical use and compliance.

July 02, 2025

On-Demand

AI Governance

Bringing privacy to the AI table with data protection by design

Join our webinar to see how privacy professionals are embedding compliance and trust into AI governance programs from the start.

June 23, 2025

On-Demand

AI Governance

Forming the AI Committee: Roles and responsibilities for enterprise-wide governance

Learn how to form an AI Committee that aligns privacy, security, data, and AI teams for responsible, enterprise-wide AI governance.

June 05, 2025

On-Demand

Privacy Management

OneTrust Spring Release

We explore the latest release which introduces AI-assisted features that help privacy and third-party risk teams scale by reducing manual effort and friction, so they can focus on the work that matters most.

May 27, 2025

Webinar

AI Governance

Mastering cross-functional AI governance series

Join our AI Governance Committee webinar series to hear how cross-functional teams are building responsible AI governance across privacy, security, data, and AI.

May 21, 2025

On-Demand

AI Governance

Navigating the evolving US AI regulatory landscape: Key insights and implications for AI Governance

Join our webinar to learn how US AI regulations are evolving and how teams can strengthen and future-proof their AI governance strategy.

May 20, 2025

On-Demand

AI Governance

Navigating AI in business functions: Risk, responsibility & compliance

In this webinar, industry experts will explore key AI risks, regulatory considerations, and best practices for aligning AI initiatives with privacy, security, and ethical frameworks.

May 08, 2025

On-Demand

AI Governance

Responsible use of data & AI

Join the OneTrust Webinar for Northern European Countries on how to effectively govern data and AI with OneTrust’s integrated solution.

April 30, 2025

Report

AI Governance

State of data 2025: The now, the near, and the next evolution of AI for media campaigns

Discover how AI is transforming media campaigns. State of Data 2025 explores AI adoption, challenges, and strategies to optimize media planning and performance.

April 02, 2025

Checklist

AI Governance

Checklist for compliance with South Korea’s AI Basic Act

Ensure compliance with South Korea’s AI Basic Act using this step-by-step checklist. Get key requirements, risk management steps, and transparency guidelines.

March 24, 2025

eBook

AI Governance

South Korea's AI Basic Act: What businesses need to know and how to comply

Discover how South Korea’s AI Basic Act impacts businesses and get a step-by-step compliance checklist to ensure responsible AI adoption.

March 12, 2025

eBook

Responsible AI

Business rewards vs. security risks: A generative AI study

Download this study on Generative AI by OneTrust and ISMG and gain insights on how organizations are currently using AI and more.

March 11, 2025

Checklist

AI Governance

Essential checklist for responsible EU AI Act compliance

Download this EU AI Act checklist and gain insights on the Act's scope and methods in building a foundation for compliance.

February 13, 2025

On-Demand

AI Governance

Operationalizing the EU AI Act

In this webinar, we’ll explore how OneTrust helps organizations meet EU AI Act compliance by operationalizing AI governance frameworks.

January 28, 2025

eBook

AI Governance

Navigating the AI data landscape: A guide to building AI-ready infrastructure

Download our eBook to learn how to build AI-ready data infrastructure, tackle unstructured data, and meet generative AI's unique demands.

January 21, 2025

On-Demand

AI Governance

Automating metadata capture: Future-proofing data management for AI

This webinar will explore how automating metadata capture can streamline the management of unstructured data, making it AI-ready while ensuring data quality and security.

January 14, 2025

On-Demand

AI Governance

Overcoming the privacy pitfalls of GenAI (APAC)

Join us and learn about the data privacy risks of adopting GenAI and practical strategies on avoiding them.

January 08, 2025

White Paper

AI Governance

Operationalizing the EU AI Act with OneTrust: A playbook for implementation

January 02, 2025

On-Demand

AI Governance

ISMG hosted panel discussion: Generative AI in 2024 – Navigating business rewards and security risks

In this recorded panel discussion, experts from OneTrust, Forcepoint, Optiv, and Protiviti explore findings from ISMG’s recent global survey of over 400 business and cybersecurity professionals.

December 19, 2024

On-Demand

Responsible AI

Overcoming the privacy pitfalls of GenAI

This webinar will explore the key privacy pitfalls organizations face when implementing GenAI, focusing on purpose limitation, data proportionality, and business continuity.

December 10, 2024

Report

AI Governance

Into the age of AI – Lessons from the future

Discover how AI-Ready Governance is redefining accountability, risk, and innovation. Download the OneTrust 2026 Predictions Report to explore key insights shaping responsible AI leadership.

December 02, 2024

On-Demand

Data Use Governance

The new data landscape: Navigating the shift to AI-ready data

This webinar will explore the how AI is affecting the data landscape, focusing on how data teams can extend common data practices to support AI’s unique use of data.

November 12, 2024

Checklist

AI Governance

AI project intake workflow checklist

Download our AI Project Intake Checklist to guide thorough assessments and ensure secure, compliant, and effective AI project planning from start to finish.

November 06, 2024

eBook

AI Governance

How to build an AI project intake workflow that balances risk and efficiency

Download our guide to building an AI project intake workflow that balances risk and efficiency, complete with a checklist for thorough, informed assessments.

November 05, 2024

On-Demand

Navigating the top 5 data sharing challenges

This webinar will uncover the top 5 data sharing challenges organizations face and demonstrate how advanced data governance solutions can streamline processes, improve data quality, and enhance compliance, allowing organizations to discover the full potential of their data assets.

October 31, 2024

White Paper

AI Governance

How the EU AI Act and recent FTC enforcements for AI shape data governance

Download this white paper to learn how to adapt your data governance program, by defining AI-specific policies, monitoring data usage, and centralizing enforcement.

October 30, 2024

Report

Responsible AI

Getting Ready for the EU AI Act, Phase 1: Discover & Catalog, The Gartner® Report

Getting Ready for the EU AI Act, Phase 1: Discover & Catalog, The Gartner® Report

October 16, 2024

On-Demand

AI Governance

California's approach to AI: Unpacking new legislation

This webinar unpacks California’s approach to AI and emerging legislations, including legislation on defining AI, AI transparency disclosures, the use of deepfakes, generative AI, and AI models.

October 15, 2024

eBook

AI Governance

Establishing a scalable AI governance framework: Key steps and tech for success

Download this coauthored eBook by OneTrust and Protiviti to learn how organizations are building scalable AI governance models and managing AI risks.

October 01, 2024

Report

Privacy Automation

Discover the economic benefits of OneTrust

Download this 2024 Forrester Consulting Total Economic Impact™ study to see how OneTrust has helped organizations navigate data management complexities, generate significant ROI, and enable the responsible use of data and AI.

September 24, 2024

On-Demand

Privacy Automation

Global regulatory update: Recent privacy developments and compliance trends

Join us for a webinar on the latest updates and emerging trends in global privacy regulations.

September 12, 2024

eBook

AI Governance

Data and AI governance for responsible use of data

Learn why discovering, classifying, and using data responsibly is the only way to ensure your AI is governed properly.

September 12, 2024

eBook

AI Governance

Securing reliable AI solutions: Strategies for trustworthy procurement

Download this eBook to explore strategies for trustworthy AI procurement and learn how to evaluate vendors, manage risks, and ensure transparency in AI adoption.

September 11, 2024

On-Demand

AI Governance

From policy to practice: Bringing your AI Governance program to life

Join our webinar to gain practical, real-world guidance from industry experts on implementing effective AI governance.

September 10, 2024

On-Demand

AI Governance

Ensuring compliance and operational readiness under the EU AI Act

Join our webinar and learn about the EU AI Act's enforcement requirements and practical strategies for achieving compliance and operational readiness.

August 22, 2024

Video

AI Governance

OneTrust AI Governance demo video

Learn how OneTrust AI Governance acts as a unified program center for AI initiatives so you can build and scale your AI governance program

August 12, 2024

On-Demand

AI Governance

AI Governance in action: A live demo

Whether your AI is sourced from vendors and third parties or developed in-house, AI Governance supports informed decision-making and helps build trust in the responsible use of AI. Join the live demo webinar to watch OneTrust AI Governance in action.

August 06, 2024

On-Demand

AI Governance

AI governance masterclass miniseries: EU AI Act

Discover the EU AI Act's impact on your business with our video series on its scope, roles, and assessments for responsible AI governance and innovation.

July 31, 2024

On-Demand

Third-Party Risk

Third-Party AI: Procurement and risk management best practices

As innovation teams race to integrate AI into their products and services, new challenges arise for development teams leveraging third-party models. Join the webinar to gain insights on how to navigate AI vendors while mitigating third-party risks.

July 25, 2024

Resource Kit

Responsible AI

EU AI Act compliance resource kit

Download this resource kit to help you understand, navigate, and ensure compliance with the EU AI Act.

July 22, 2024

On-Demand

AI Governance

From build to buy: Exploring common approaches to governing AI

In this webinar, we'll navigate the intricate landscape of AI Governance, offering guidance for organizations whether they're developing proprietary AI systems or procuring third-party solutions.

July 10, 2024

eBook

AI Governance

Navigating the ISO 42001 framework

Discover the ISO 42001 framework for ethical AI use, risk management, transparency, and continuous improvement. Download our guide for practical implementation steps.

July 03, 2024

On-Demand

Privacy Management

Scaling to new heights with AI Governance

Join OneTrust experts to learn about how to enforce responsible use policies and practice “shift-left” AI governance to reduce time-to-market.

June 25, 2024

On-Demand

AI Governance

AI Governance Leadership Webinar: Best Practices from IAPP AIGG with KPMG

Join our webinar to hear about the challenges and solutions in AI governance as discussed at the IAPP conference, featuring insights and learnings from our industry thought leadership panel.

June 18, 2024

On-Demand

AI Governance

Colorado's Bill on AI: Protecting consumers in interactions with AI systems

Colorado has passed landmark legislation regulating the use of Artificial Intelligence (AI) Systems. In this webinar, our panel of experts will review best practices and practical recommendations for compliance with the new law.

June 11, 2024

On-Demand

AI Governance

Governing data for AI

In this webinar, we’ll break down the AI development lifecycle and the key considerations for teams innovating with AI and ML technologies.

June 04, 2024

Report

AI Governance

GRC strategies for effective AI Governance: OCEG research report

Download the full OCEG research report for a snapshot of what organizations are doing to govern their AI efforts, assess and manage risks, and ensure compliance with external and internal requirements.

May 22, 2024

Report

AI Governance

Global AI Governance law and policy: Jurisdiction overviews

In this 5-part regulatory article series, sponsored by OneTrust, the IAPP uncovers the legal frameworks, policies, and historical context pertinent to AI governance across five jurisdictions: Singapore, Canada, the U.K., the U.S., and the EU.

May 08, 2024

On-Demand

AI Governance

Embedding trust by design across the AI lifecycle

In this webinar, we’ll look at the AI development lifecycle and key considerations for governing each phase.

May 07, 2024

On-Demand

AI Governance

Navigating AI policy in the US: Insights on the OMB Announcement

This webinar will provide insights for navigating the pivotal intersection of the newly announced OMB Policy and the broader regulatory landscape shaping AI governance in the United States. Join us as we unpack the implications of this landmark policy on federal agencies and its ripple effects across the AI ecosystem.

April 18, 2024

On-Demand

AI Governance

Data privacy in the age of AI

In this webinar, we’ll discuss the evolution of privacy and data protection for AI technologies.

April 17, 2024

Resource Kit

AI Governance

OneTrust's journey to AI governance resource toolkit

What actually goes into setting up an AI governance program? Download this resource kit to learn how OneTrust is approaching our own AI governance, and our experience may help shape yours.

April 11, 2024

On-Demand

AI Governance

AI in (re)insurance: Balancing innovation and legal challenges

Learn the challenges AI technology poses for the (re)insurance industry and gain insights on balancing regulatory compliance with innovation.

March 14, 2024

On-Demand

Privacy Management

Fintech, data protection, AI and risk management

Watch this session for insights and strategies on building a strong data protection program that empowers innovation and strengthens consumer trust.

March 13, 2024

On-Demand

Privacy Management

Managing cybersecurity in financial services

Get the latest insights from global leaders in cybersecurity management in this webinar from our Data Protection in Financial Services Week 2024 series.

March 12, 2024

On-Demand

AI Governance

Government keynote: The state of AI in financial services

Join the first session of our Data Protection in Financial Services Week 2024 series, where we discuss the current state of AI regulations in the EU.

March 11, 2024

White Paper

AI Governance

Getting started with AI governance: Practical steps and strategies

Download this white paper to explore key drivers of AI and the challenges organizations face in navigating them, ultimately providing practical steps and strategies for setting up your AI governance program.

March 08, 2024

On-Demand

AI Governance

Revisiting IAPP DPI Conference – Key global trends and their impact on the UK

Join OneTrust and PA Consulting as they discuss key global trends and their impact on the UK, reflecting on the topics from IAPP DPI London.

March 06, 2024

On-Demand

AI Governance

AI regulations in North America

In this webinar, we’ll discuss key updates and drivers for AI policy in the US, examining actions being taken by the White House, FTC, NIST, and the individual states.

March 05, 2024

In-Person Event

Responsible AI

Data Dialogues: Implementing Responsible AI

Learn how privacy, GRC, and data professionals can assess AI risk, ensure transparency, and enhance explainability in the deployment of AI and ML technologies.

February 23, 2024

On-Demand

AI Governance

Global trends shaping the AI landscape: What to expect

In this webinar, OneTrust DataGuidance and experts will examine global developments related to AI, highlighting key regulatory trends and themes that can be expected in 2024.

February 13, 2024

eBook

Privacy Management

Understanding the Data Privacy Maturity Model

Data privacy is a journey that has evolved from a regulatory compliance initiative to a customer trust imperative. This eBook provides an in-depth look at the Data Privacy Maturity Model and how the business value of a data privacy program can be realised as it matures.

February 07, 2024

On-Demand

AI Governance

The EU AI Act

In this webinar, we’ll break down the four levels of AI risk under the AI Act, discuss legal requirements for deployers and providers of AI systems, and so much more.

February 06, 2024

On-Demand

Responsible AI

Preparing for the EU AI Act: Part 2

Join Sidley and OneTrust DataGuidance for a rapid-response webinar to unpack the recently published, near-final text of the EU AI Act.

February 05, 2024

Data Sheet

Privacy Automation

An overview of the Data Privacy Maturity Model

Data privacy is evolving from a regulatory compliance initiative to a customer trust imperative. This data sheet outlines the four stages of the Data Privacy Maturity Model to help you navigate this shift.

February 05, 2024

Checklist

AI Governance

Questions to add to existing vendor assessments for AI

Managing third-party risk is a critical part of AI governance, but you don’t have to start from scratch. Use these questions to adapt your existing vendor assessments to be used for AI.

January 31, 2024

On-Demand

AI Governance

Getting started with AI Governance

In this webinar, we’ll look at the AI Governance landscape, key trends and challenges, and preview topics we’ll dive into throughout this masterclass.

January 16, 2024

On-Demand

AI Governance

First Annual Generative AI Survey: Business Rewards vs. Security Risks Panel Discussion

OneTrust sponsored the first annual Generative AI survey, published by ISMG, and this webinar breaks down the key findings of the survey’s results.

January 12, 2024

Report

AI Governance

ISMG's First annual generative AI study - Business rewards vs. security risks: Research report

OneTrust sponsored the first annual ISMG generative AI survey: Business rewards vs. security risks.

January 04, 2024

On-Demand

AI Governance

Building your AI inventory: Strategies for evolving privacy and risk management programs

In this webinar, we’ll talk about setting up an AI registry, assessing AI systems and their components for risk, and unpack strategies to avoid the pitfalls of repurposing records of processing to manage AI systems and address their unique risks.

December 19, 2023

On-Demand

Responsible AI

Preparing for the EU AI Act

Join Sidley and OneTrust DataGuidance for a rapid-response webinar on the EU AI Act.

December 14, 2023

On-Demand

Consent & Preferences

Marketing Panel: Balance privacy and personalization with first-party data strategies

Join this on-demand session to learn how you can leverage first-party data strategies to achieve both privacy and personalization in your marketing efforts.

December 04, 2023

On-Demand

AI Governance

Revisiting IAPP DPC: Top trends from IAPP's privacy conference in Brussels

Join the OneTrust and KPMG webinar to learn more about the top trends from this year’s IAPP Europe DPC.

November 28, 2023

White Paper

Responsible AI

EU AI Act Conformity Assessment: A step-by-step guide

Conformity Assessments are a key and overarching accountability tool introduced by the EU AI Act. Download the guide to learn more about the Act, Conformity Assessments, and how to perform one.

November 17, 2023

eBook

AI Governance

Navigating the EU AI Act

With the use of AI proliferating at an exponential rate, the EU rolled out a comprehensive, industry-agnostic regulation that looks to minimize AI’s risk while maximizing its potential.

November 17, 2023

On-Demand

Responsible AI

OneTrust AI Governance: Championing responsible AI adoption begins here

Join this webinar demonstrating how OneTrust AI Governance can equip your organization to manage AI systems and mitigate risk to demonstrate trust.

November 14, 2023

White Paper

AI Governance

AI playbook: An actionable guide

What are your obligations as a business when it comes to AI? Are you using it responsibly? Learn more about how to go about establishing an AI governance team.

October 31, 2023

Infographic

AI Governance

The Road to AI Governance: How to get started

Getting started with AI governance is a huge initiative for any organization. From data mapping your AI inventory to revising assessments of AI systems, put your team in a position to ensure responsible AI use across all departments.

October 06, 2023

White Paper

AI Governance

How to develop an AI governance program

Download this white paper to learn how your organization can build an AI governance team to ensure responsible AI use across all use cases.

October 06, 2023

eBook

Responsible AI

AI Chatbots: Your questions answered

We answer your questions about AI and chatbot privacy concerns and how AI is changing the global regulatory landscape.

August 08, 2023

Webinar

Responsible AI

Unpacking the EU AI Act and its impact on the UK

Prepare your business for the EU AI Act and its impact on the UK with this expert webinar. We explore the Act's key points and requirements, building an AI compliance program, and staying ahead of the rapidly changing AI regulatory landscape.

July 12, 2023

On-Demand

Responsible AI

AI, chatbots and beyond: Combating the data privacy risks

Prepare for AI data privacy and security risks with our expert webinar. We will delve into the evolving technology and how to ensure ethical use and regulatory compliance.

June 27, 2023

On-Demand

AI Governance

The EU's AI Act and developing an AI compliance program

Join Sidley and OneTrust DataGuidance as we discuss the proposed EU AI Act, the systems and organizations that it covers, and how to stay ahead of upcoming AI regulations.

May 30, 2023

White Paper

AI Governance

Data protection and fairness in AI-driven automated data processing applications: A regulatory overview

With AI systems impacting our lives more than ever before, it's crucial that businesses understand their legal obligations and responsible AI practices.

May 15, 2023

White Paper

AI Governance

Navigating responsible AI: A privacy professional's guide

Download our white paper and learn how privacy teams help organizations establish and implement policies that ensure AI applications are responsible and ethical.

May 03, 2023

On-Demand

AI Governance

AI regulation in the UK – The current state of play

Join OneTrust and its panel of experts as they explore Artificial Intelligence regulation within the UK, sharing invaluable insights into where we are and what’s to come.

March 20, 2023

Webinar

AI Governance

AI governance masterclass

Navigate global AI regulations and identify strategic steps to operationalize compliance with the AI governance masterclass series.

Webinar

AI Governance

Data Protection in Financial Services Week 2024 series

OneTrust DataGuidance and Sidley are joined by industry experts for the annual Data Protection in Financial Services Week.

Regulation Book

AI Governance

AI Governance: A consolidated reference

Download this reference book and have foundational AI governance documents at your fingertips as you position your organization to meet emerging AI regulations and guidelines.

Webinar

Privacy & Data Governance

AI Governance Maturity Framework webinar series

Join OneTrust and several of our partners for webinars focusing on the fundamentals of building effective privacy programs.

In-Person Event

Privacy & Data Governance

AI in action roadshow | PA Consulting

Join OneTrust and PA for an AI governance roundtable over lunch in a city near you. Learn how firms use AI to manage AI and automate compliance for 2026.

Webinar

Technology Risk & Compliance

Risk in 30'

Join our risk management webinar series to learn how AI, NIS2, and modern TPRM practices help teams reduce manual work and strengthen resilience.